“It is essential to discuss and reflect on artificial intelligence.”

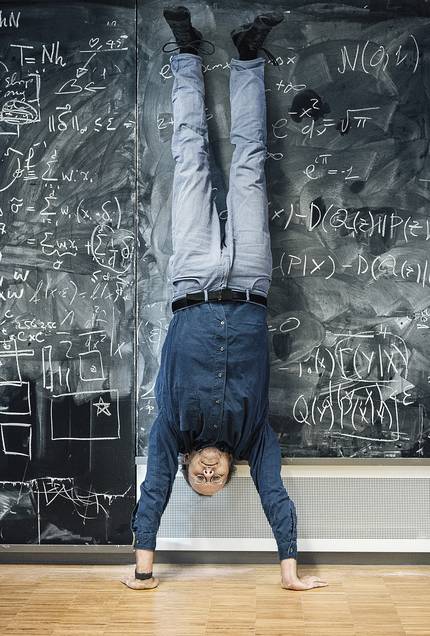

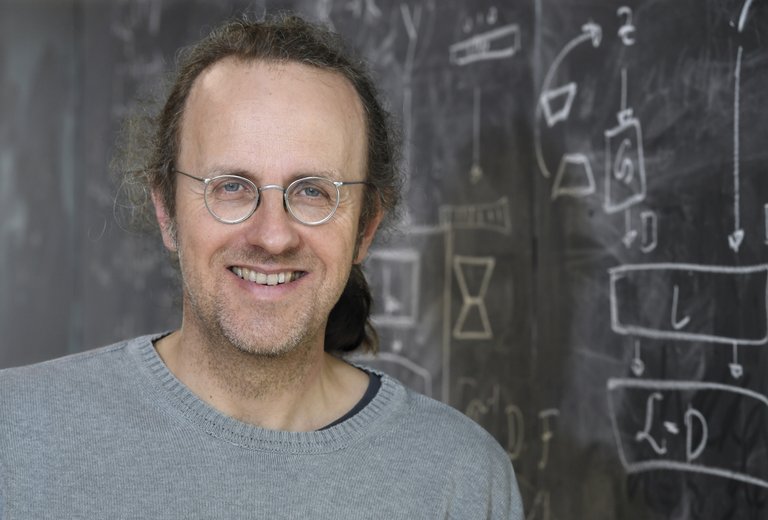

Bernhard Schölkopf is well known in the world of artificial intelligence research. Among other roles, he is one of the directors of the Max Planck Institute for Intelligent Systems (specifically, head of the Empirical Inference Department), and he also works with other centers and organizations. His research has received wide recognition, including one of the BBVA 2020 "Frontiers of Knowledge" awards for the development of kernel methods. The conversation begins with kernel methods but soon moves on to other topics: exoplanets, accessibility, sustainability, military research, philosophy, transparency in science and public participation.

You are an expert in machine learning, especially in kernel methods and causality. What work have you done in this area?

Kernel methods are a family of machine learning techniques. They are methods for learning rules, dependencies or relationships from observations, and what makes them special is their connection to several fields of mathematics, such as functional analysis and optimization theory. This gives a beautiful and elegant way of learning rules from data that can be used in many fields, such as medicine or industry. They are useful not only for finding relationships in the data but also for going further and uncovering causal relationships, which goes a bit deeper than conventional machine learning.
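To make "learning rules from data" concrete, here is a minimal sketch of one kernel method, kernel ridge regression with a Gaussian (RBF) kernel, written with NumPy. It is an illustration only, not Schölkopf's own code: the choice of kernel, the `gamma` and `lam` values, and the toy sine-shaped data are all assumptions made for the example. The kernel measures similarity between observations, and the learned rule is a weighted sum of those similarities.

```python
import numpy as np

def rbf_kernel(a, b, gamma=1.0):
    """Gaussian (RBF) kernel matrix: k(x, y) = exp(-gamma * ||x - y||^2)."""
    sq_dists = (
        np.sum(a**2, axis=1)[:, None]
        + np.sum(b**2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    return np.exp(-gamma * sq_dists)

def fit_kernel_ridge(x, y, gamma=1.0, lam=1e-3):
    """Solve (K + lam * I) alpha = y for the dual coefficients alpha."""
    k = rbf_kernel(x, x, gamma)
    return np.linalg.solve(k + lam * np.eye(len(x)), y)

def predict(x_train, alpha, x_new, gamma=1.0):
    """Learned rule: f(x) = sum_i alpha_i * k(x_i, x)."""
    return rbf_kernel(x_new, x_train, gamma) @ alpha

# Hypothetical toy data: a nonlinear rule y = sin(x) observed with a little noise.
rng = np.random.default_rng(0)
x_train = np.linspace(0, 2 * np.pi, 40)[:, None]
y_train = np.sin(x_train[:, 0]) + 0.05 * rng.standard_normal(40)

alpha = fit_kernel_ridge(x_train, y_train, gamma=1.0, lam=1e-2)
x_test = np.array([[np.pi / 2], [np.pi], [3 * np.pi / 2]])
print(predict(x_train, alpha, x_test))  # should be roughly [1, 0, -1]
```

The model never sees the formula sin(x); it recovers the rule only from the noisy observations, which is the point made in the answer above. Other kernel methods, such as support vector machines, rely on the same kernel idea with a different objective.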

One of the applications may not be the first to come to mind: you have used them to detect exoplanets. How did that come about?

Let me explain. I was spending a sabbatical year with my family in New York, and I started talking to astronomers. They told me they had a problem detecting exoplanets in the data collected by the Kepler space telescope. An exoplanet is detected when it passes in front of its star, from the drop in the star's brightness. But this drop is very small, and it is difficult to distinguish from the noise generated by the observation itself. However, this noise and the telescope's defects also show up in the data of other stars, so they can be inferred from those stars, and that is what we took advantage of.
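The idea in this answer can be illustrated with a small simulation. The sketch below is a simplified, hypothetical version, not the team's actual Kepler pipeline: it assumes the instrument noise is shared by all stars and enters linearly, predicts the target star's light curve from 20 other stars with ordinary least squares, subtracts that prediction, and then searches the residuals for the dip. All light curves, the 5% systematic drifts, the 1% transit depth and the window width are invented for the example.

```python
import numpy as np

rng = np.random.default_rng(42)
n_time, n_other = 500, 20
t = np.linspace(0.0, 1.0, n_time)

# Hypothetical instrument systematics shared by all stars (two smooth drifts).
latent = np.vstack([np.sin(2 * np.pi * 3 * t), t**2 - 0.5])  # shape (2, n_time)

# Other stars: each sees its own mixture of the shared systematics plus independent noise.
mix_other = rng.normal(size=(n_other, 2))
others = 0.05 * mix_other @ latent + 0.001 * rng.standard_normal((n_other, n_time))

# Target star: systematics + noise + a small transit dip (1% deep, 20 samples long).
transit_start, transit_len, depth = 300, 20, 0.01
dip = np.zeros(n_time)
dip[transit_start:transit_start + transit_len] = -depth
target = 0.05 * rng.normal(size=2) @ latent + 0.001 * rng.standard_normal(n_time) + dip

def remove_systematics(target, others):
    """Predict the target from the other stars by least squares and return the residuals."""
    design = np.column_stack([others.T, np.ones(target.size)])  # intercept column added
    coef, *_ = np.linalg.lstsq(design, target, rcond=None)
    return target - design @ coef

def box_search(residuals, width):
    """Return (start index, depth) of the window of the given width with the lowest mean."""
    means = np.convolve(residuals, np.ones(width) / width, mode="valid")
    start = int(np.argmin(means))
    return start, -means[start]

residuals = remove_systematics(target, others)
start, est_depth = box_search(residuals, transit_len)
print(start, round(est_depth, 4))  # should be close to the injected 300 and 0.01
```

Because the other stars cannot "see" the planet, they can explain the shared instrument noise but not the dip, so the dip survives in the residuals even though the raw drifts are several times larger than it.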

Within a few years, we found between 20 and 30 exoplanets, which were later confirmed by other methods. Soon after, it was confirmed that one of them orbits its star at just the right distance for liquid water to exist. It was the first potentially habitable exoplanet we found.

In what other areas can it be applied?

In practically any area where experimental observations can be made. You need, on the one hand, a large amount of data, a large number of measurements, and, on the other hand, relationships between the different observations. For example, we can relate the measurements of a biomarker to the risk of a tumour and find out whether the biomarker can predict a tumour before it is detected. In other words, a rule is inferred from the data, even when it is difficult for a person to perceive it.

Apart from kernel methods, what do you think will be the main developments of artificial intelligence?

One of the most exciting areas right now is natural language processing (NLP). Ten or twenty years ago, a lot of research was done on computer vision: how to recognize objects, or how to distinguish between cars and pedestrians, for example to develop self-driving cars. In the last ten years, considerable progress has been made in the study of language and in text prediction, and now programs are able to generate text. This has created a sense of wonder and fascination that I believe will grow considerably in the coming years.

How can we ensure that the benefits of artificial intelligence systems reach everyone?

To make the benefits of artificial intelligence systems as accessible as possible, the first step is to publish the research. Not only should the methods be described in a scientific journal, but the code should also be published, and ideally the data used to train the models as well.

However, this is not a one-size-fits-all issue. In medicine, for example, data often cannot be made public at all. In other areas, publishing may be inadvisable because of the risk of malicious use. But in general, if we want research to benefit as many people and societies as possible, I believe it should be as transparent as possible.

Can artificial intelligence also cause problems in other areas, such as the environment?

It is true that training artificial intelligence programs requires an enormous amount of energy and consumes a lot of resources. And research centers are not always located in the best places from a sustainability perspective either.

But from another point of view, artificial intelligence can also benefit the environment. The climate is a complex system for which we do not have a complete model. Artificial intelligence can help us understand the climate better, which will help us stop or mitigate climate change. It will also help us create better technologies: better solar cells, better batteries.

I read that you have refused to take part in military research. Is that right?

Yes, that's right. Traditionally, in wars, a human being is involved. Someone decides to attack or to shoot; someone gives the order, and it is someone's decision. This means that there is responsibility and moral judgment.

War is always bad. But if machines become more and more intelligent and are given the responsibility to make these decisions, who is responsible? The one who developed the program to find and kill a person? The one who designed it? The one who sold it? The government that uses the system? It is hard to predict how this could change warfare.

I believe that this must be discussed and examined very carefully. But I am very skeptical; I do not see that happening. That is why I always try to support and encourage those who say such weapons should be banned.

You have also studied philosophy. Do you think it is useful, or even necessary, to take philosophy into account in the development of artificial intelligence?

Without a doubt, philosophy has been very useful. I think anything you learn can be useful at some point, and if you have studied philosophy, it will shape the way you think about the problems of artificial intelligence. Of course, many engineers deal only with the technical aspects of artificial intelligence. But the questions we are trying to answer are not only technical: how do we detect the structure of the world? Why does the world seem to follow laws rather than chance? In my opinion, these questions are deeply philosophical, and we must approach them from that perspective.

What other disciplines should be considered?

Artificial intelligence will ultimately influence everything, so every field should be taken into account, from the natural sciences to art, as well as economics, political science and sociology. In fact, more public debate is needed.

What do you think of the calls to pause research for a period of time?

As I have just said, I think it is necessary to discuss and reflect on the development and consequences of artificial intelligence. But these moratoriums are unrealistic; they will not happen. It is telling that Elon Musk is behind one of the petitions. The fact is that companies are competing, and those who are behind need time to catch up with the leaders.

It is much more important to understand how these systems work, to analyze the consequences they may have, and to see how we can ensure that they are used for good. And this has to be done together with society; we cannot leave everything in the hands of the companies.

What might artificial intelligence make possible in the future that is unthinkable today?

That question cannot be answered; what will happen is unpredictable. When the Internet was created, it was impossible to know how we would use it today and what it would bring us. Nowadays, we have systems capable of writing poems. That is a real leap, and in the future conversations between machines and people will become more and more common.

We have to think about how to strengthen society so that it is aware of the good and bad uses of artificial intelligence.
