Programming neurons

2007/12/01 Roa Zubia, Guillermo - Elhuyar Zientzia. Source: Elhuyar aldizkaria

Twelve years ago, in 1995, a computer-driven car crossed the United States, 9,600 kilometers in total. The adventure was called No Hands Across America, since the goal was to drive without hands. The car was not driverless: a person operated the accelerator and the brake. Apart from those two tasks, everything else was done by a navigation platform called PANS (Portable Advanced Navigation Support), a set of computers, GPS and other systems. Since then, the world of driverless robotic cars has advanced greatly, though not so much at the software level. Today's robotic cars use software similar to that of the PANS system: programs that allow computers to simulate the functioning of a brain.

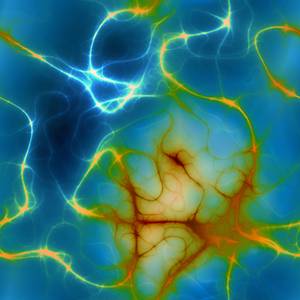

The brain works by connecting millions of small units, the neurons. Individually, neurons are not very powerful: they receive small electrical signals, change them slightly and send them on. But in teams neurons are very powerful, because these small and simple units are organized into a large and sophisticated network.

Each neuron receives signals from thousands of other neurons. It decides whether to pass the signal on and, if so, it adds its own contribution to the signal and sends it to thousands of other neurons. This way of working gives the neural network an enormous capacity, which includes abstract tasks.

Computer neurons

A neural network can be simulated on a computer. Many small calculation units can be defined and connected to one another. By feeding data to some of them you can start the network and collect the result of its work from the output neurons. In fact, computer scientists call programs that work this way neural networks and classify them as artificial intelligence. Neural networks are not the only way to create artificial intelligence, but they are one of the most important.
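One such calculation unit can be sketched in a few lines of Python. This is only an illustration, not the method of any particular system: the function name `neuron`, the weights and the threshold are all assumptions chosen for the example. The unit adds up its incoming signals, each scaled by a weight, and fires only if the total reaches a threshold.

```python
def neuron(inputs, weights, threshold):
    """One simulated neuron: fire (return 1) when the weighted sum
    of the incoming signals reaches the threshold, else stay silent (0)."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0

# Hypothetical weights and threshold, picked only for illustration.
WEIGHTS = [0.6, 0.6]
THRESHOLD = 1.0

# One active input is not enough to make this neuron fire; two are.
one_active = neuron([1, 0], WEIGHTS, THRESHOLD)
both_active = neuron([1, 1], WEIGHTS, THRESHOLD)
print(one_active, both_active)
```

Connecting the output of units like this one to the inputs of others is what turns them into a network.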

Most definitions of artificial intelligence contain verbs such as arguing, reasoning, interpreting and learning. The question is how a computer can do these things. The brain, of course, does them by means of millions of neurons and connections. But designing such a system by hand on a computer is impossible, even if the number of simulated neurons is much lower. For example, what does each neuron in the network have to do for a farming robot to know that it is time to pick the tomatoes? Nobody knows the answer, but that doesn't matter, because a neural network on a computer does not know in advance how the job is done. It has to learn.

The programmer gives the network an initial structure and puts it through a training phase. To do this, the programmer gives it a problem and, together with the statement of the problem, its correct solution. The network analyzes the problem and produces a solution, which is compared with the correct one it was given, and the activity of each neuron is adjusted to correct the solution produced by the network as a whole. Then it tries again; if the program is well made, on the second attempt the network's solution and the correct solution will be closer together. The two solutions are compared again, the work of the neurons is readjusted, and the network tries once more. And so on, until it reaches a correct solution.
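The try, compare and adjust cycle described above can be sketched for the smallest possible case: a single simulated neuron learning the logical AND operation. The adjustment used here is the classic perceptron learning rule, chosen as an assumption for illustration; the article does not say which rule real systems use, and the names `predict` and `train` are hypothetical.

```python
# Training examples for logical AND: (problem, correct solution).
EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

def predict(weights, bias, inputs):
    """The network's current answer for one problem."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train(examples, max_rounds=100):
    """Repeat: answer, compare with the correct solution, adjust."""
    weights, bias = [0, 0], 0  # initial structure: knows nothing yet
    for _ in range(max_rounds):
        mistakes = 0
        for inputs, correct in examples:
            error = correct - predict(weights, bias, inputs)
            if error != 0:
                mistakes += 1
                # Nudge each weight toward the correct solution.
                weights = [w + error * x for w, x in zip(weights, inputs)]
                bias += error
        if mistakes == 0:  # every answer matched: training is done
            break
    return weights, bias

weights, bias = train(EXAMPLES)
for inputs, correct in EXAMPLES:
    print(inputs, "network:", predict(weights, bias, inputs), "correct:", correct)
```

The network is never told how AND works. It only sees its own answers next to the correct ones and shifts its weights a little after each mistake.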

This way of learning seems like cheating, since the network is given the solution it is supposed to find. But the brain does the same. Human beings, for example, learn to read or to recognize a face that way: by trying over and over again after seeing the solution. Recognizing faces is a good example; newborn children spend months learning to recognize the faces of their parents, which requires a minimum level of abstraction. And if the father or mother of a six-month-old child changes appearance (a haircut, for example), the child may have great difficulty recognizing the face.

Input and output

To make the network learn, in short, you have to start it up: give it an input, feed it data. These data do not reach every neuron directly; a few neurons take them in, process them and pass them on to the neurons they are connected to. The data travel from neuron to neuron, like an electrical signal, until they reach the output neurons. These last neurons provide the output.

Input and output: the old dogma of computing. Both are necessary; they are essential components of a neural network. The simplest possible network would have a single neuron that takes in one simple input, transforms it and gives one simple output: one neuron handling one piece of data. Such a network would not learn much. Even to learn simple logical operations, a network needs several neurons.

The operation called XOR, for example, takes two inputs, each a zero or a one; when exactly one of the two inputs is one, the result of the operation is one, and when both inputs are equal (two zeros or two ones), the result is zero. This is a very simple operation, but it takes five neurons for a network to learn how to do it: two neurons to collect the input data, one for the final output and two intermediate neurons.
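A five-neuron layout for XOR (which gives 1 only when exactly one of its two inputs is 1) can be sketched as follows. The weights here are picked by hand purely for illustration, whereas a real network would have to learn its own; the names `step` and `xor_network` are hypothetical.

```python
def step(total):
    """A neuron fires (1) only when its total input is positive."""
    return 1 if total > 0 else 0

def xor_network(a, b):
    # The two input neurons simply pass a and b on.
    # Intermediate neuron 1 fires if at least one input is 1 (OR).
    h_or = step(a + b - 0.5)
    # Intermediate neuron 2 fires only if both inputs are 1 (AND).
    h_and = step(a + b - 1.5)
    # Output neuron fires when OR is on and AND is off.
    return step(h_or - h_and - 0.5)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", xor_network(a, b))
```

The two intermediate neurons are what a single neuron lacks: one weighted sum followed by a threshold cannot separate the inputs (0, 1) and (1, 0) from both (0, 0) and (1, 1).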

Layers

Intermediate neurons are needed for intermediate tasks. And if those intermediate tasks are not all alike, each one is done by a separate group of neurons. For that reason, computer scientists organize neurons in layers. The first layer holds the neurons that receive the input; next comes the first intermediate layer, if one is needed, followed by any further intermediate layers in order; and last comes the layer of neurons that gives the output.

The signal makes this journey, from input to output, along a path determined by the connections. It can be a direct path, or one that sends the signal back from the later layers to the earlier ones; the structure of the connections depends on the task. And very complex structures can carry out very abstract tasks.
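The direct path, where the signal moves layer by layer from input to output, can be sketched as a short loop. This covers only the direct case, not structures that feed signals back to earlier layers. The representation of a layer as a list of `(weights, bias)` pairs, the example `NETWORK` and its hand-picked values are assumptions made for this illustration.

```python
def step(total):
    """A neuron fires (1) only when its total input is positive."""
    return 1 if total > 0 else 0

def run_layer(layer, signals):
    """Every neuron in the layer sees all signals from the previous layer."""
    return [
        step(sum(w * s for w, s in zip(weights, signals)) + bias)
        for weights, bias in layer
    ]

def forward(layers, inputs):
    """Carry the signal through each layer in order, input to output."""
    signals = list(inputs)  # the input layer just passes the data on
    for layer in layers:
        signals = run_layer(layer, signals)
    return signals

# Hypothetical network: one intermediate layer of two neurons,
# then an output layer of one neuron. Weights chosen by hand.
NETWORK = [
    [([1, 1], -0.5), ([1, 1], -1.5)],  # intermediate layer
    [([1, -1], -0.5)],                 # output layer
]

print(forward(NETWORK, [0, 1]))
```

Adding another intermediate layer means adding one more list to `NETWORK`; the loop in `forward` stays the same.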

The brain works in a similar way: some neurons receive the input (what is seen, heard, etc.), certain groups of neurons are activated for each type of task, and others produce the output (the reaction). But the brain is much more complex than the neural networks used in computing. Among other things, its number of neurons and connections grows as it learns, at least at the beginning of life.

Trial and error

The more abstract the task, the more complex the network that learns to do it. It seems impossible, for example, to design a neural network that tells printed letters (characters) apart. But such networks are common: they are known as OCR software. How did computer scientists design them? How did they know how many neurons to use, how many layers to organize them in and which connections to establish?

The answer is simple: at first they did not know. To carry out this type of work, they choose an initial neural network and make it learn. It is quite possible that the network cannot learn, that it does not settle once started or that it gives meaningless results. In those cases, they adjust the network by adding or removing neurons, restructuring the network itself or modifying the transformation performed by each neuron. And they try again.

Finally, once the network is able to learn, it is ready to work in the 'real world'. And even then the network's work must still be adjusted, because the learning process relies on specific examples, and the real world has many different cases.
