It's time for machines to take into account human diversity and inclusion.

2022/01/16 Agirre Ruiz de Arkaute, Aitziber - Elhuyar Zientzia Source: Elhuyar aldizkaria

Researchers are making a huge effort to enable machines to identify humans easily and to communicate with them ever more effectively. However, researchers at Purdue University (USA) have warned in the journal Science that, despite this continuous improvement, interfaces still disregard human diversity, not only in terms of ethnicity but also in terms of social class and abilities. They say that technological exclusion is still being fostered.

Human diversity must be taken into account when designing research; otherwise, interfaces reproduce the same prejudices and errors as the researchers. Research groups studying the relationship between machines and human beings have therefore highlighted the need for scientists capable of confronting cultural hegemony. Without this, machines will not be integrated into our natural, virtual, psychological, economic or social environment.

However, in most cases researchers rely on white citizens of high socioeconomic status when developing interfaces, and the authors have found that many groups are consequently left out of these technologies.

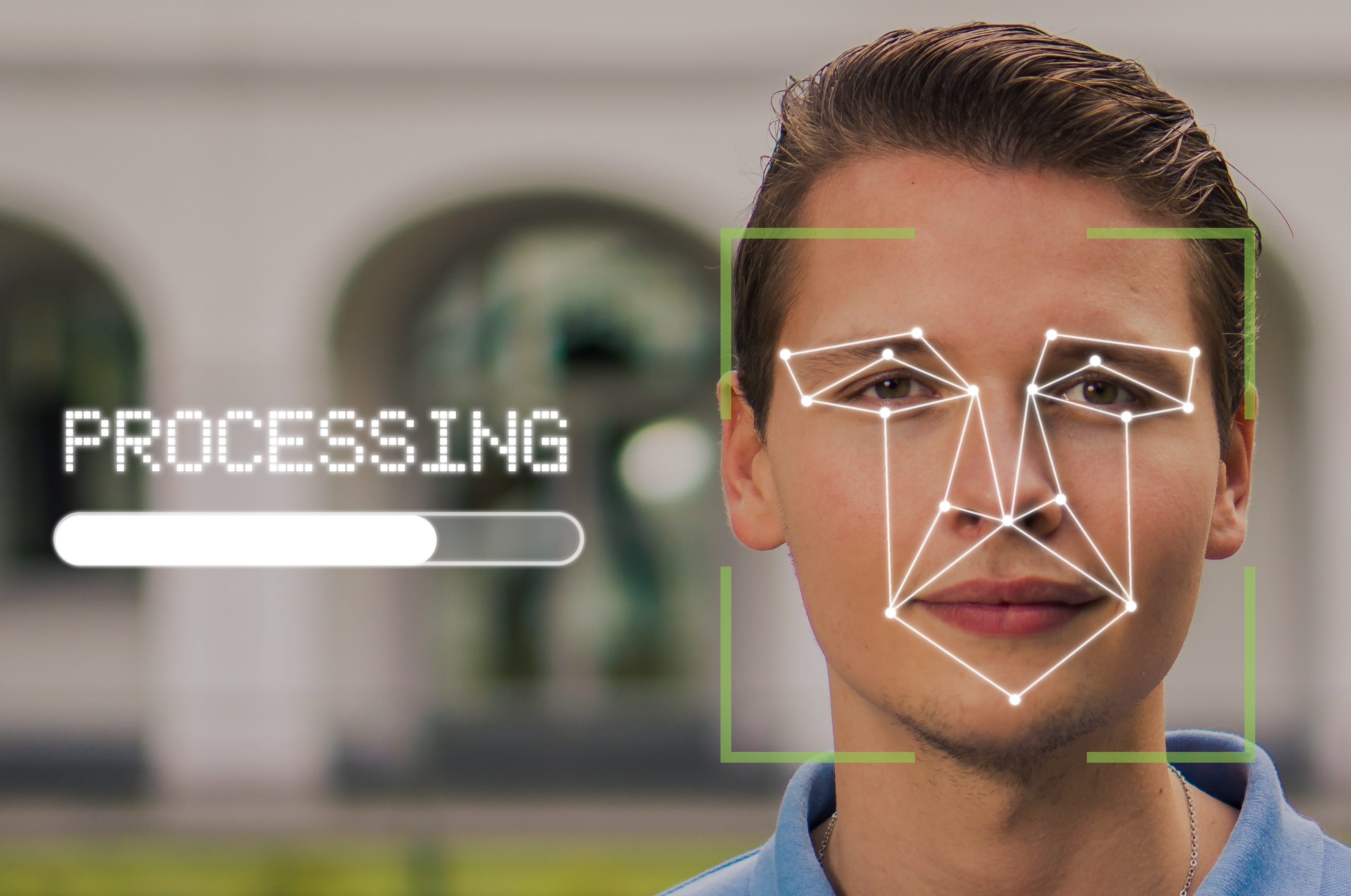

For example, interfaces constantly make errors when recording and interpreting data. This happens not only when people actively interact with machines (e.g. with an autonomous vehicle), but also when users are aware of the interaction but passive (as with devices that collect data for medical diagnosis), and when they are unaware of it and become users of the technology without having consented (as with the face analysis technologies used by the police).

The consequences are serious: when interfaces for identifying skin diseases are trained on white-skinned people, they make major errors on dark skin; facial recognition algorithms do not properly identify racialized people; and the list goes on.

The researchers have therefore called for low-income citizens, ethnic minorities, migrants and people with functional diversity to finally be included in interface training processes.
