Genes, neurons and computers

2015/01/01 Azkune Galparsoro, Gorka - Researcher and lecturer, Euskal Herriko Unibertsitateko Informatika Fakultatea (Faculty of Informatics, University of the Basque Country). Source: Elhuyar aldizkaria

Nature as a source of inspiration

The concept of artificial intelligence appeared around the middle of the 20th century. In its early days, the field worked with symbolic intelligence and search problems. Artificial chess players were a good example. The most successful was Deep Blue, IBM's famous computer, which beat the renowned chess player Garry Kasparov in 1997.

These machines, however, did not resemble human intelligence. They could do one specific thing very well, but nothing else. They did not learn, they were unable to generalize their knowledge and, if the rules of the game changed slightly, they could not react. Researchers therefore explored new paths and opened up a new field of artificial intelligence: machine learning.

In this article we will not analyze machine learning in full. We will explore a specific but attractive area: learning algorithms inspired by nature. By combining neural networks and genetic algorithms, we will look at a process very similar to human learning.

Neural networks

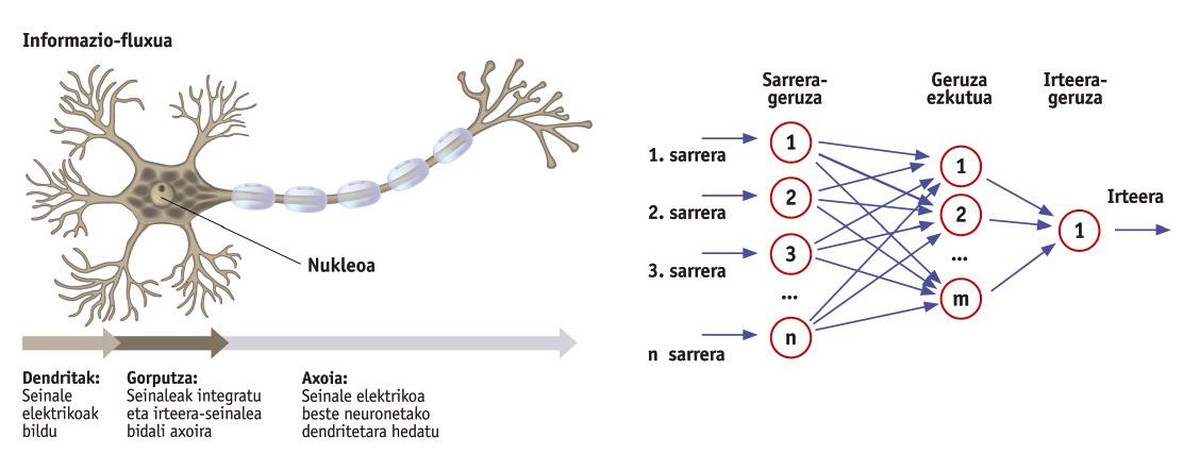

Our brain is made up of neurons, a mass of interconnected neurons. But if we look at just one of these neurons, we will be surprised by its simplicity. From the point of view of information, a neuron receives a series of inputs in the form of electrical signals and emits another signal (Figure 1). The processing capacity of our brain therefore rests on the interaction of these simple neurons.

With this idea in mind, building artificial neural networks does not seem very difficult. And it is not. The first artificial neural networks were developed between the 1940s and 1960s. However, their potential did not become apparent until the late 1980s. Today, neural networks can be found in countless applications.

A neural network is a set of neurons that converts data inputs into data outputs. As can be seen in Figure 2, neural networks are organized into layers: an input layer, hidden layers (there can be as many as you want) and an output layer. Each layer is made up of neurons (the circles in the figure), and every neuron in a layer is connected to the neurons in the next layer. This is the structure of a conventional neural network. Keep in mind that there are networks of many other kinds, designed to meet different objectives. For example, some networks connect the neurons in the output layer back to the hidden layers so that the network has memory. But, for the moment, let's put complex structures aside.

The inputs of each neuron are numbers. When these numbers reach a neuron, each one is multiplied by another number, called a weight, and all the results are added together. This sum is the output of the neuron (in practical networks it is usually also passed through a simple non-linear function). As you can see, what each neuron does is very simple. But the network as a whole, with all its neurons connected, is able to approximate practically any mathematical function. This is the power of the network. What we have just said can be demonstrated mathematically, but that is beyond the scope of this article.
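
The calculation described above can be sketched in a few lines of Python. This is only an illustration of the simplified description (a plain weighted sum, with no further transformation); the function names and the example weights are our own assumptions, not part of any particular library.

```python
from typing import List

def neuron_output(inputs: List[float], weights: List[float]) -> float:
    """Multiply each input by its weight and add up the results."""
    return sum(x * w for x, w in zip(inputs, weights))

def layer_output(inputs: List[float], layer_weights: List[List[float]]) -> List[float]:
    """Each row of layer_weights holds the weights of one neuron in the layer."""
    return [neuron_output(inputs, weights) for weights in layer_weights]

def network_output(inputs: List[float], network_weights: List[List[List[float]]]) -> List[float]:
    """Feed the outputs of each layer into the next layer."""
    values = inputs
    for layer_weights in network_weights:
        values = layer_output(values, layer_weights)
    return values

# Hypothetical network: 2 inputs, one hidden layer of 2 neurons, 1 output neuron.
example_weights = [
    [[0.5, -1.0], [2.0, 1.0]],  # hidden layer: two neurons, two weights each
    [[1.0, 0.5]],               # output layer: one neuron, two weights
]

print(network_output([1.0, 2.0], example_weights))
```

Changing any of the numbers in `example_weights` changes what the network computes, which is why finding good weights is the central problem.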

Therefore, two things are particularly important in the behavior of a neural network: its structure (the number of layers and the nature of the connections) and the weights of each neuron. For simple operations a small network is enough, one in which the appropriate structure and all the weights can easily be set by hand. Complex applications, however, require large networks in which it is practically impossible to determine every weight of every neuron manually. It is therefore essential that the neural network itself set the values of these weights through an automatic process. This process is called machine learning and can be carried out in various ways. Here we will look at reinforcement learning. But first we must look at genetic algorithms.

Genetic algorithms

Darwin's theory of evolution taught us how living beings evolve. Those best adapted to the environment are the most likely to reproduce, and reproduction combines the characteristics of the parents and gives rise to a new generation. By combining the characteristics of the best-adapted individuals, generation after generation, better individuals are born. Genetic algorithms use these ideas to solve complex optimization problems.

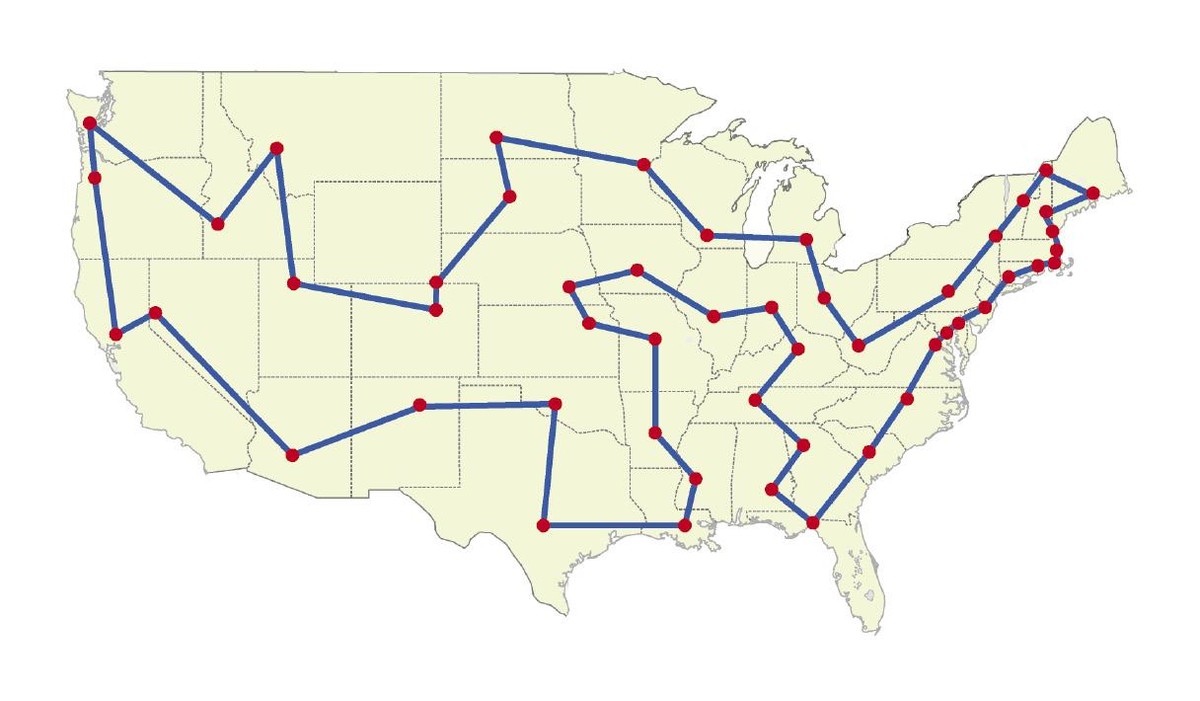

Let's look at the following problem. A traveler wants to visit n cities, but not in just any order. To save money, he wants to take the shortest route through these n cities (Figure 3). Given that we know the distances between all the cities, how can we plan the trip? It seems simple: calculate every possible order in which the cities can be visited, add up the distances and take the shortest. This solution is correct, but the number of possible orders grows as n! (n factorial), so when n is large a computer takes far too long to find the solution. It is therefore not a practical solution.
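
The "try every order" approach can be written as a short sketch. We assume here that the traveler does not need to return to the starting city, and the distance table is invented purely for illustration. The last line prints how many visiting orders there are for just 20 cities, a number larger than 2 × 10^18.

```python
from itertools import permutations
from math import factorial

def route_length(route, distances):
    """Add up the distances between consecutive cities on the route."""
    return sum(distances[a][b] for a, b in zip(route, route[1:]))

def shortest_route(distances):
    """Try every visiting order and keep the shortest one."""
    cities = range(len(distances))
    return min(permutations(cities), key=lambda route: route_length(route, distances))

# Hypothetical symmetric distance table for 4 cities (row = from, column = to).
DISTANCES = [
    [0, 2, 9, 10],
    [2, 0, 6, 4],
    [9, 6, 0, 3],
    [10, 4, 3, 0],
]

best = shortest_route(DISTANCES)
print(best, route_length(best, DISTANCES))

# Number of visiting orders the same function would have to try for 20 cities.
print(factorial(20))
```

With 4 cities the function only has to compare 24 routes; every extra city multiplies that count again.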

Genetic algorithms can work well on the traveler's problem. How? First, an initial generation is created from random orderings of the n cities. These random orderings are called individuals. The distance traveled by each individual is calculated, and the algorithm keeps the few with the shortest distances in order to cross them. Crossing means combining two individuals to create a new one. In the case of the cities, for example, we can combine the first n/2 cities of one individual with n/2 cities of a second one to create a new individual of n cities. The individuals resulting from these crosses form the second generation.
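
One detail of the cross deserves a small sketch. If we simply glued the first half of one individual to the second half of another, some city could appear twice and another could be left out. A common way around this, and the assumption made below, is to take the first half from the first parent and then add the missing cities in the order in which they appear in the second parent. The function name `crossover` is our own.

```python
def crossover(parent_a, parent_b):
    """First half from parent_a, then the missing cities in parent_b's order."""
    half = len(parent_a) // 2
    head = parent_a[:half]
    tail = [city for city in parent_b if city not in head]
    return head + tail

# Two hypothetical individuals: each is one visiting order of 6 cities.
parent_a = [0, 1, 2, 3, 4, 5]
parent_b = [5, 3, 1, 0, 4, 2]

child = crossover(parent_a, parent_b)
print(child)  # [0, 1, 2, 5, 3, 4]
```

The child inherits the beginning of the first route and the relative order of the remaining cities from the second, and it still visits every city exactly once.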

Another important factor is mutation. As in nature, new individuals can be born with mutations. In our case, swapping two cities in random positions can be regarded as a mutation. Mutation is rare, but it plays a very important role in finding fitter individuals.

As the algorithm produces new generations, the individuals provide better and better solutions. In the end, although it is not always possible to find the optimal solution, the best individual is usually very close to the optimum. So a very good solution can be found in a short time. Isn't it surprising?
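
Putting selection, crossing and mutation together, the whole procedure might look like the sketch below. It is a minimal illustration under our own assumptions: the population size, the number of survivors, the mutation rate and the randomly placed cities are all invented, and the best individuals are carried over unchanged to the next generation so that the best route never gets worse.

```python
import math
import random

def route_length(route, distances):
    """Add up the distances between consecutive cities on the route."""
    return sum(distances[a][b] for a, b in zip(route, route[1:]))

def crossover(parent_a, parent_b):
    """First half from parent_a, then the missing cities in parent_b's order."""
    head = parent_a[:len(parent_a) // 2]
    return head + [city for city in parent_b if city not in head]

def mutate(route, rng):
    """Swap two cities in random positions."""
    i, j = rng.sample(range(len(route)), 2)
    route[i], route[j] = route[j], route[i]

def evolve(distances, population_size=30, survivors=10, generations=50,
           mutation_rate=0.1, seed=0):
    rng = random.Random(seed)
    n = len(distances)
    # Initial generation: random visiting orders of the n cities.
    population = [rng.sample(range(n), n) for _ in range(population_size)]
    best_per_generation = []
    for _ in range(generations):
        # Keep the individuals with the shortest routes.
        population.sort(key=lambda route: route_length(route, distances))
        best_per_generation.append(route_length(population[0], distances))
        parents = population[:survivors]
        # Cross the survivors, occasionally mutating a child.
        children = []
        while len(children) < population_size - survivors:
            parent_a, parent_b = rng.sample(parents, 2)
            child = crossover(parent_a, parent_b)
            if rng.random() < mutation_rate:
                mutate(child, rng)
            children.append(child)
        population = parents + children
    best = min(population, key=lambda route: route_length(route, distances))
    return best, best_per_generation

# Hypothetical map: 12 cities placed at random on a unit square.
map_rng = random.Random(1)
points = [(map_rng.random(), map_rng.random()) for _ in range(12)]
DIST = [[math.hypot(ax - bx, ay - by) for (bx, by) in points] for (ax, ay) in points]

best, history = evolve(DIST)
print("first generation:", history[0], "last generation:", history[-1])
```

The list `history` records the length of the best route in each generation, which makes it easy to see how the population improves over time.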

Reinforcement learning

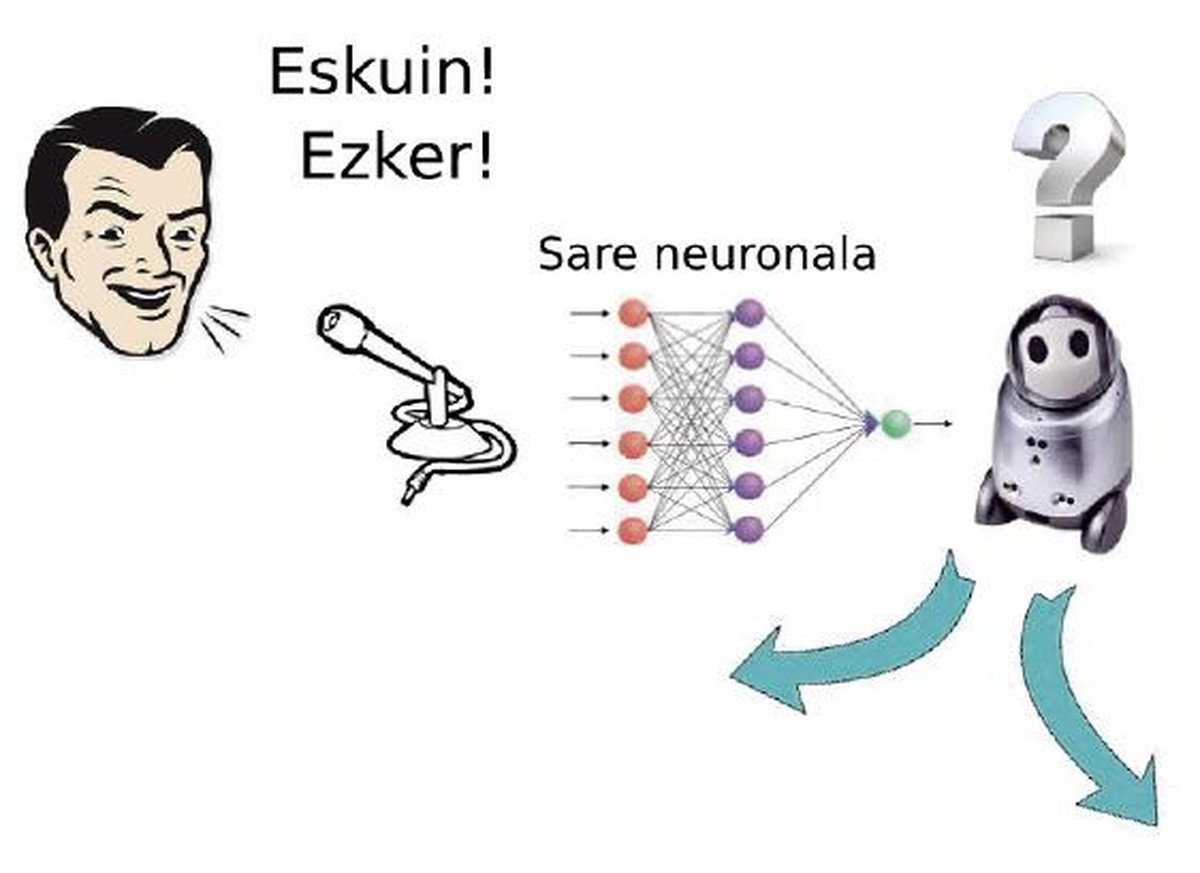

Now comes the best part. Imagine that we have a robot that can move in any direction. We want to teach it to go right when we say “right” and to go left when we say “left”. To do this we fit it with a microphone that picks up our voice. The signal generated by the microphone is the input of a neural network. The outputs are the signals sent to the motors that move the robot (Figure 4).

The robot's goal is to find the weights of the neurons in the neural network that make it do what we have just described. Among all the possible values of all the weights, there are solutions that respond to the voice command with the appropriate movement. To find them we will use reinforcement learning. We will give the robot a “right” or “left” command and, depending on the movement it makes, we will give it a mark from 1 to 10. If we say “right” and it moves left, we will give it a 1. But if it moves forward, veering a little to the right, we will still give it a 5. And of course, when it moves right, it will get a 10!

The learning process works as follows. First, the genetic algorithm chooses random solutions, that is, specific weights for the neural network. The robot runs these solutions and the best ones are identified according to the reward received. The best solutions are crossed, mutation is occasionally applied, and the new generation is tried out. The rewards it receives are used to create yet another generation, and so on until a solution is found that obtains the highest rewards for all voice commands.
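
The loop described above can be simulated with a toy sketch. Everything in it is an assumption made for illustration: instead of a real microphone signal, each command is encoded as two numbers; the network has two hidden neurons and one output that says how far to steer left (-1) or right (+1); the mark is computed by a formula rather than given by a person; and mutation adds a little random noise to one weight.

```python
import math
import random

# Hypothetical encoding: command -> (network inputs, wanted steering direction).
COMMANDS = {"right": ([1.0, 0.0], 1.0), "left": ([0.0, 1.0], -1.0)}
N_WEIGHTS = 6

def steer(weights, inputs):
    """Tiny 2-2-1 network. Output lies between -1 (full left) and +1 (full right)."""
    h0 = math.tanh(weights[0] * inputs[0] + weights[1] * inputs[1])
    h1 = math.tanh(weights[2] * inputs[0] + weights[3] * inputs[1])
    return math.tanh(weights[4] * h0 + weights[5] * h1)

def grade(weights):
    """Average mark over all commands: 1 = opposite direction, 10 = correct direction."""
    marks = []
    for inputs, wanted in COMMANDS.values():
        agreement = wanted * steer(weights, inputs)  # between -1 and 1
        marks.append(5.5 + 4.5 * agreement)
    return sum(marks) / len(marks)

def train(population_size=40, survivors=10, generations=60, seed=0):
    rng = random.Random(seed)
    # First generation: random weights for the network.
    population = [[rng.uniform(-1, 1) for _ in range(N_WEIGHTS)]
                  for _ in range(population_size)]
    history = []
    for _ in range(generations):
        # Try every solution and keep the ones with the highest marks.
        population.sort(key=grade, reverse=True)
        history.append(grade(population[0]))
        best = population[:survivors]
        children = []
        while len(children) < population_size - survivors:
            parent_a, parent_b = rng.sample(best, 2)
            # Cross: first half of the weights from one parent, the rest from the other.
            child = parent_a[:N_WEIGHTS // 2] + parent_b[N_WEIGHTS // 2:]
            # Occasional mutation: nudge one randomly chosen weight.
            if rng.random() < 0.2:
                child[rng.randrange(N_WEIGHTS)] += rng.gauss(0, 0.5)
            children.append(child)
        population = best + children
    return max(population, key=grade), history

best_weights, history = train()
print("mark of the best robot in the first generation:", history[0])
print("mark of the best robot in the last generation:", history[-1])
```

Since weights are plain numbers rather than cities, the cross can simply splice the two parents together without worrying about repeated values.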

These experiments have already been carried out and the technique has been proven to work. But it is not magic, it is mathematics. What is really taking place is an iterative search for the most suitable parameters of a non-linear function. In our case, we look for the weights of the neural network through an optimization process whose objective is to maximize the reward obtained.

To finish

Reinforcement learning is based on the learning processes of humans and animals. Here we have shown how to carry out this process using neural networks and genetic algorithms, both of which have their basis in nature. It is fascinating to see that we can train machines to learn. Perhaps it is even more fascinating that this ability to learn has been achieved by imitating processes that take place in nature itself, which shows the depth of the knowledge of nature that we have acquired through science over many years. We have been able to combine psychology, biology, neuroscience, mathematics and computer science so that machines can learn.

There are already several examples of machines capable of learning. When we shop online and rate products, we automatically receive new recommendations, because the systems behind the shop learn from us. Web search engines also use learning to deliver personalized results. Machines can recognize faces through a camera and drive cars autonomously, and many more examples could be given of the success of machine learning and artificial intelligence.

The road is still long. We have only begun. Although artificial intelligence and machine learning have evolved a lot, we are still far from what a human being can do. But we move forward.
