How do you prepare coffee?

2017/03/01 Azkune Galparsoro, Gorka - Researcher and lecturer, Faculty of Informatics, University of the Basque Country. Source: Elhuyar aldizkaria

In our daily lives we do many things: getting out of bed, having breakfast, watching TV, and so on. Being able to carry out each of these activities is fundamental to a good quality of life. That is precisely the goal of smart homes: to give the people who live in them the support they need to carry out their daily activities.

But what are smart homes? At their core they are ordinary houses fitted with sensors and computers. The sensors provide information about what people are doing, and the computers process that information to understand behavior and make decisions. This article looks at the first part: gathering the sensor information and detecting human activities.

To start with, we need sensors. There are many types of sensors on the market, and we cannot explain them one by one here. For this work, let us assume that sensors are attached to our everyday objects and tools. For example, when someone picks up a glass, the sensor on it is activated and the action is recorded. By collecting this kind of information over time, the computer must infer the activities. For example, if a person takes a cup, turns on the coffee maker and then takes the sugar bowl, the computer should conclude that the person is making coffee.
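As a minimal sketch of the kind of record such sensors could produce, the Python snippet below stores each activation as a timestamped event and lists the objects touched in a time window. The `SensorEvent` class, the `actions_in_window` helper and the object names are illustrative assumptions, not part of the system described in the article.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class SensorEvent:
    """One sensor activation (hypothetical record layout)."""
    timestamp: datetime
    obj: str  # everyday object the sensor is attached to


def actions_in_window(events, start, end):
    """Return the objects touched between start and end, in time order."""
    ordered = sorted(events, key=lambda e: e.timestamp)
    return [e.obj for e in ordered if start <= e.timestamp <= end]


# Events may arrive out of order; they are sorted by timestamp before use.
events = [
    SensorEvent(datetime(2017, 3, 1, 8, 2), "coffee_maker"),
    SensorEvent(datetime(2017, 3, 1, 8, 1), "cup"),
    SensorEvent(datetime(2017, 3, 1, 8, 3), "sugar_bowl"),
    SensorEvent(datetime(2017, 3, 1, 21, 0), "tv_remote"),
]

morning = actions_in_window(
    events,
    datetime(2017, 3, 1, 8, 0),
    datetime(2017, 3, 1, 8, 10),
)
print(morning)
```

A sequence such as cup, coffee maker, sugar bowl is the raw material from which the activity "making coffee" has to be inferred.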

How to detect human activities?

If we look at the research carried out to date, we find two main approaches to detecting human activities:

- Data-based techniques: sensor data collected from a person is fed to machine learning algorithms, which learn that person's activities. The computer learns from raw data. These techniques have many strengths: because they learn from each person's own data, they can learn personalized habits and adapt as people change. But there are also drawbacks. What has been learned is hard to generalize (what is learned from one person cannot be applied to another), and the learning phase requires a large amount of labeled data. The latter is a serious problem, because labeled data is very difficult to obtain.

- Knowledge-based techniques: what we know about each activity is encoded in logical models, and the sensor information is then checked for consistency with those models in order to find the matching activity. Advantages: the models defined are applicable to anyone, and no data is needed to start up the system (there is no learning phase). Drawbacks: obtaining personalized models is very hard, because it is difficult to know every detail of each person in advance. In addition, the activity models are rigid and cannot adapt to the changes people go through over time.

Looking closely at the pros and cons of the two approaches, it is clear that they have opposite characteristics. What data-based techniques do well, knowledge-based techniques do poorly, and vice versa. They are two different, opposing ways of solving the same problem. But are they incompatible?

In search of hybrid techniques

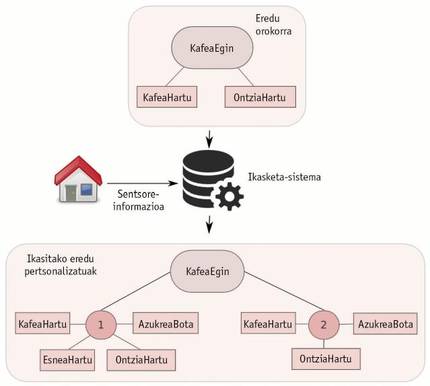

It would be good to bring the two approaches together and combine the best of both worlds, wouldn't it? That is what has been done in this thesis. A new process for modeling human activity has been proposed, developed and tested. Figure 2 shows a diagram of this new process. The proposal combines knowledge-based and data-based techniques, offering a hybrid solution. First, an expert defines general activity models that are applicable to anyone. Next, the sensor data generated by a person living in a smart home is collected. Using the general models and a data-based learning algorithm, the initial models are enriched with the details of that particular person. In this way, personalized models are learned. Finally, these models are presented to the expert so that they can be added to the knowledge base.

The result is a system that brings together the best characteristics of both approaches. On the one hand, human knowledge is used to create general models. These capture the general characteristics of an activity, so they are applicable to anyone. On the other hand, the system can learn personalized models by collecting a person's sensor data and applying data-based algorithms. In addition, using the general models during learning removes the need for labeled data, which overcomes one of the drawbacks of data-based techniques. Finally, as a person's behavior changes, the learned personalized models adapt accordingly.

Better with an example

Let us try to understand the proposal in Figure 2 better with a simple example. Take the activity of making coffee. As we all know, to make coffee you need coffee and a container to drink it from. The activity of making coffee will therefore have two mandatory actions: taking the coffee and taking the container. As you can see, an activity is broken down into actions. We have just defined a general model by combining two actions. This model is applicable to anyone, since no one in the world can make a coffee without coffee or a container (Figure 3).
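One simple way to picture such a general model is as a set of mandatory actions. The sketch below is only an illustration under that assumption: the thesis encodes its models in logical form, and the names `GENERAL_MODELS`, `matching_activities` and the action labels are hypothetical.

```python
# A general model as the set of actions that must all be present
# (an illustrative simplification, not the thesis's actual encoding).
GENERAL_MODELS = {
    "make_coffee": {"coffee", "container"},
}


def matching_activities(observed_actions, models=GENERAL_MODELS):
    """Return the activities whose mandatory actions were all observed."""
    observed = set(observed_actions)
    return [name for name, required in models.items() if required <= observed]


print(matching_activities(["container", "coffee", "sugar"]))
print(matching_activities(["container"]))
```

Extra actions such as taking sugar do not prevent a match; only the mandatory actions have to be present.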

Now suppose that the person living in the smart home usually makes coffee in two ways: sometimes coffee with milk, using coffee, milk, a container and sugar; and sometimes plain coffee, using only coffee, a container and sugar. The process proposed in the thesis learns these personalized models from the general models and the sensor data. It is worth stressing that the sensor data is not labeled, which overcomes the main weakness of the most widely used data-based techniques.

Custom model learning algorithm

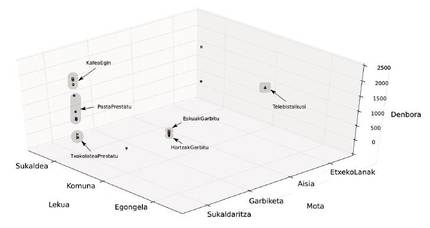

It is not the aim of this article to describe in detail the learning algorithm developed in the thesis, but let us try to explain the main ideas (Figure 4). A person's actions can be placed on three axes:

- Place where the person carried out the action: kitchen, bathroom...

- Type of the action: cleaning, kitchen, leisure...

- Time (day and time of day) when the action took place.

These actions are detected by sensors. If we plot the detected actions on these three axes while a person goes about their daily activities, we see that activities can be described as groups of actions that lie close to each other.
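To make the idea of closeness on the three axes concrete, here is a small sketch. The article does not say how distance is measured, so the `distance` function below is purely an assumption: it adds 1 for each categorical axis that differs, plus the time gap expressed in units of `minutes_scale`. The `Action` class and the example values are likewise hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Action:
    """A detected action placed on the three axes (hypothetical layout)."""
    name: str
    location: str  # place axis, e.g. "kitchen"
    kind: str      # type axis, e.g. "kitchen" or "leisure"
    when: datetime  # time axis


def distance(a, b, minutes_scale=10.0):
    """Assumed distance: 1 per differing categorical axis + scaled time gap."""
    d = 0.0
    d += 1.0 if a.location != b.location else 0.0
    d += 1.0 if a.kind != b.kind else 0.0
    d += abs((a.when - b.when).total_seconds()) / 60.0 / minutes_scale
    return d


cup = Action("take_cup", "kitchen", "kitchen", datetime(2017, 3, 1, 8, 1))
coffee = Action("take_coffee", "kitchen", "kitchen", datetime(2017, 3, 1, 8, 3))
tv = Action("turn_on_tv", "living_room", "leisure", datetime(2017, 3, 1, 21, 0))

print(distance(cup, coffee) < distance(cup, tv))
```

Under this assumed measure, the two coffee-related actions are much closer to each other than either is to watching TV, which is what allows them to be grouped together.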

The learning algorithm developed in the thesis therefore does the following:

- Groups actions that lie close to each other in this space (this is called clustering).

- Using the general models, infers the activity to which each group belongs. To do so, it checks whether the general models are consistent with each group of actions.

- Gathers all the groups of actions belonging to an activity and finds the patterns they share, thereby learning the personalized models.
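The three steps can be sketched in a few lines of Python. This is a deliberately simplified illustration, not the algorithm from the thesis: clustering is reduced to splitting on time gaps, general models are plain sets of mandatory actions, and "common patterns" are action sets that recur at least `min_count` times. All names and thresholds are assumptions.

```python
from collections import Counter

# General models as sets of mandatory actions (illustrative assumption).
GENERAL_MODELS = {"make_coffee": {"coffee", "container"}}


def cluster_by_time(events, max_gap=5):
    """Step 1 (simplified): split (minute, action) pairs on large time gaps."""
    clusters, current, last = [], [], None
    for minute, action in sorted(events):
        if last is not None and minute - last > max_gap:
            clusters.append(current)
            current = []
        current.append(action)
        last = minute
    if current:
        clusters.append(current)
    return clusters


def label(cluster, models=GENERAL_MODELS):
    """Step 2: first activity whose mandatory actions all appear, else None."""
    for name, required in models.items():
        if required <= set(cluster):
            return name
    return None


def learn_personal_models(events, min_count=2):
    """Step 3 (simplified): keep action sets that recur for an activity."""
    counts = Counter()
    for cluster in cluster_by_time(events):
        activity = label(cluster)
        if activity is not None:
            counts[(activity, frozenset(cluster))] += 1
    learned = {}
    for (activity, actions), n in counts.items():
        if n >= min_count:
            learned.setdefault(activity, []).append(set(actions))
    return learned


# Unlabeled events: (minutes since the start of observation, action).
events = [
    (0, "container"), (1, "coffee"), (2, "milk"), (3, "sugar"),
    (600, "tv_remote"),
    (1440, "container"), (1441, "coffee"), (1442, "sugar"),
    (2880, "coffee"), (2881, "container"), (2882, "sugar"), (2883, "milk"),
    (4320, "sugar"), (4321, "coffee"), (4322, "container"),
]

models = learn_personal_models(events)
print(models)
```

Note that the events carry no activity labels: the general model is what assigns each cluster to "make_coffee", and the two recurring variants (with and without milk) emerge from the data.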

In this way, specific activity models are learned for each person, capturing all the actions that person performs. Moreover, if the learning process is repeated over time as new data is collected, the changes a person goes through can be detected and learned. That is, the system can learn how a person's way of carrying out the same activities changes, down to the concrete actions.

Conclusions

Why are personalized models so important? On the one hand, because they make it possible to give each person the help they need. For example, if a person always adds sugar to their coffee (personal model) and at some point the system sees that they have not added it, it can remind them. The smart home will thus adapt better to the people who live in it.
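A reminder of this kind could be sketched as a comparison between what was observed and what the person always does. The snippet below is a hypothetical illustration: it treats each personalized model as a set of actions and flags any action that appears in every variant but was not observed. The names `PERSONAL_MODELS`, `usual_actions` and `missing_actions` are assumptions.

```python
# Personalized models for making coffee, as sets of actions
# (illustrative values taken from the two variants in the example).
PERSONAL_MODELS = [
    {"coffee", "milk", "container", "sugar"},
    {"coffee", "container", "sugar"},
]


def usual_actions(personal_models):
    """Actions present in every personalized variant of the activity."""
    return set.intersection(*map(set, personal_models))


def missing_actions(observed, personal_models):
    """Actions the person always performs but that were not observed."""
    return sorted(usual_actions(personal_models) - set(observed))


print(missing_actions(["container", "coffee"], PERSONAL_MODELS))
```

Milk is not flagged when it is absent, because this person only uses it in one of the two variants; sugar is, because it appears in both.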

On the other hand, personalized models are important because of their potential use in healthcare. Geriatricians and neurologists have shown that changes in daily activities can be an early sign of mental illness. The techniques developed in this thesis can be a way to analyze these changes accurately. The study of personalized activities over time can therefore be of great help in fighting these diseases, since treatment could begin before other medical symptoms appear.

In the future we will have to keep working to solve the problems that remain open. How can this type of solution be extended to more realistic situations, for example when several people live in the same house and carry out activities together? How can we avoid placing a sensor in every corner and on every object in the house without giving up the details of the activities?

There is still much to do in human activity detection systems, but we believe it is worth the effort, because the benefits they could bring are significant. They may offer a substantial way of improving people's quality of life, and we should take advantage of it.
