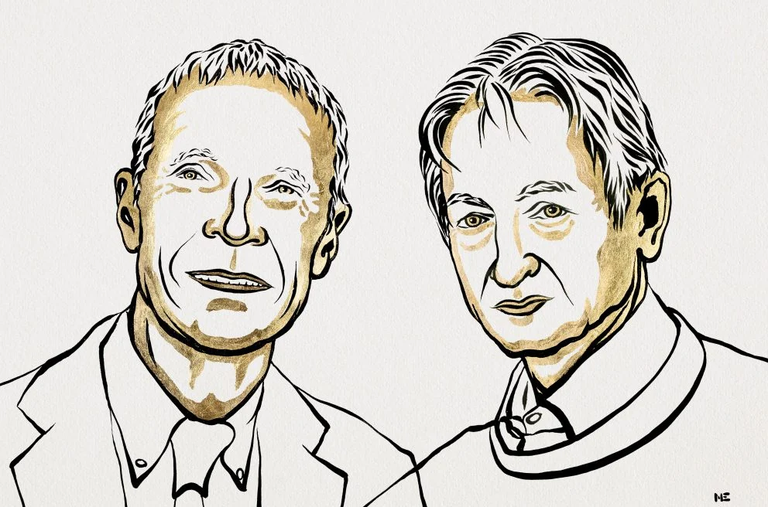

Physics Nobel for the researchers who laid the foundations of artificial intelligence systems

Researchers John J. Hopfield and Geoffrey E. Hinton will receive the Nobel Prize in Physics for their discoveries on artificial neural networks, which are essential to the development of machine learning systems.

According to the Royal Swedish Academy of Sciences, this year’s laureates used tools from physics to develop methods that underpin today’s powerful machine learning. John Hopfield created an associative memory that can store and reconstruct images and other types of patterns in data. Geoffrey Hinton, for his part, invented a method that can autonomously find properties in data, making it possible, for example, to identify specific elements in images.

What is called artificial intelligence is usually built on machine learning with artificial neural networks. In its early days, this technology was inspired by the structure of the brain. In a network of artificial neurons, the brain's neurons are represented by nodes, and synapses by connections that can be weaker or stronger. The network is trained by strengthening the connections between some nodes and weakening those between others.

The analogy is actually older, dating back to the 1940s. In the 1960s, however, some theoretical results suggested that this route would not succeed. Physics, for its part, provided the key to understanding and exploiting new phenomena.

Hopfield network and Boltzmann machine

In 1982, John Hopfield created a network based on associative memory: a method for storing and recreating patterns. He had observed that systems made of many small elements interacting with one another can give rise to new, collective phenomena. In particular, he drew on what was known about magnetic materials, whose special properties come from the spin of their atoms. Hopfield built his method on the interaction between the spins of neighboring atoms. As a result, when the Hopfield network is given an incomplete image, it updates the values of its nodes one by one, lowering the network's energy, until it finds the stored image that best matches the input and reconstructs the complete image.
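To make the associative-memory idea concrete, here is a minimal sketch in plain Python. It assumes the common textbook formulation rather than Hopfield's original presentation: node values of +1 or -1, a Hebbian-style rule that sets each connection from the stored patterns, an energy function that these updates never increase, and nodes updated one at a time to the sign of their weighted input. The function names and the two 8-node example patterns are hypothetical.

```python
import random

def train(patterns):
    """Hebbian-style storage: w[i][j] = (1/n) * sum over patterns of p[i]*p[j], no self-connections."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w

def energy(w, s):
    """Network energy E = -1/2 * sum_ij w[i][j]*s[i]*s[j]; one-at-a-time updates never raise it."""
    n = len(s)
    return -0.5 * sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))

def recall(w, state, max_sweeps=10, seed=0):
    """Update nodes one at a time, in random order, to the sign of their weighted input."""
    rng = random.Random(seed)
    s = list(state)
    order = list(range(len(s)))
    for _ in range(max_sweeps):
        rng.shuffle(order)
        changed = False
        for i in order:
            h = sum(w[i][j] * s[j] for j in range(len(s)))
            new = 1 if h >= 0 else -1
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:  # a full sweep without changes: a stable state (energy minimum)
            break
    return s

# Hypothetical example: store two orthogonal 8-node patterns.
p1 = [1, 1, 1, 1, -1, -1, -1, -1]
p2 = [1, -1, 1, -1, 1, -1, 1, -1]
w = train([p1, p2])

noisy = [-1] + p1[1:]  # p1 with its first value flipped (an "incomplete" image)
restored = recall(w, noisy)
print(restored == p1, energy(w, noisy), energy(w, restored))  # prints: True -1.5 -3.0
```

Starting from a corrupted copy of the first pattern, the updates restore the stored pattern and the energy drops. With so few nodes the network can hold only a couple of patterns reliably; the two used here were chosen to be orthogonal so that each one is a stable state.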

When Hopfield published his article on associative memory, Geoffrey Hinton was working at Carnegie Mellon University in Pittsburgh (USA). He had previously studied experimental psychology and artificial intelligence, and wanted to know whether machines, like people, could learn to process patterns. Together with his colleague Terrence Sejnowski, he started from Hopfield's network and developed a new method using ideas from statistical physics. The method was published in 1985 under the name Boltzmann machine.

The Boltzmann machine typically uses two types of nodes. The visible nodes receive the information, while the others form a hidden layer. The values and connections of the hidden nodes also contribute to the energy of the network as a whole. The machine is run by applying a rule that updates the node values one at a time. The Boltzmann machine is an early example of a generative model. Although many researchers lost interest in artificial neural networks in the 1990s, Hinton kept improving his method, and other researchers built on it. Through pre-training, they made it much more efficient.
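The following minimal sketch illustrates how such a machine is run, assuming the common textbook formulation: binary units (0 or 1), symmetric connection weights and biases, an energy E(s) = -sum_{i<j} w_ij s_i s_j - sum_i b_i s_i, and units updated one at a time, each switching on with a probability given by the logistic function of its total input (Gibbs sampling). The weights, biases, and function names are hypothetical, and the sketch shows only sampling, not the learning rule that adjusts the weights.

```python
import itertools
import math
import random

def energy(w, b, s):
    """E(s) = -sum_{i<j} w[i][j]*s[i]*s[j] - sum_i b[i]*s[i] for binary s in {0, 1}."""
    n = len(s)
    pair = sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(i + 1, n))
    return -pair - sum(b[i] * s[i] for i in range(n))

def exact_distribution(w, b, temperature=1.0):
    """Boltzmann distribution p(s) = exp(-E(s)/T) / Z, by enumerating every state (tiny n only)."""
    states = list(itertools.product([0, 1], repeat=len(b)))
    weights = [math.exp(-energy(w, b, s) / temperature) for s in states]
    z = sum(weights)
    return {s: wt / z for s, wt in zip(states, weights)}

def gibbs_sample(w, b, steps, temperature=1.0, seed=0):
    """Update one unit at a time: unit i turns on with probability sigmoid(input_i / T)."""
    rng = random.Random(seed)
    n = len(b)
    s = [rng.randint(0, 1) for _ in range(n)]
    counts = {}
    for _ in range(steps):
        for i in range(n):
            total_input = b[i] + sum(w[i][j] * s[j] for j in range(n) if j != i)
            p_on = 1.0 / (1.0 + math.exp(-total_input / temperature))
            s[i] = 1 if rng.random() < p_on else 0
        key = tuple(s)
        counts[key] = counts.get(key, 0) + 1
    return {k: c / steps for k, c in counts.items()}

def visible_marginal(dist, n_visible):
    """Sum out the hidden units: p(v) = sum over h of p(v, h)."""
    marginal = {}
    for s, p in dist.items():
        v = s[:n_visible]
        marginal[v] = marginal.get(v, 0.0) + p
    return marginal

# Hypothetical 3-unit machine: units 0 and 1 are visible, unit 2 is hidden.
w = [[0.0, 1.0, -2.0],
     [1.0, 0.0, 1.5],
     [-2.0, 1.5, 0.0]]  # symmetric, zero diagonal
b = [0.5, -1.0, 0.2]

exact = visible_marginal(exact_distribution(w, b), n_visible=2)
sampled = visible_marginal(gibbs_sample(w, b, steps=50_000), n_visible=2)
for v in sorted(exact):
    print(v, round(exact[v], 3), round(sampled.get(v, 0.0), 3))
```

Comparing the sampled frequencies of the visible units with the exact probabilities shows where statistical physics comes in: individual states keep changing, but their long-run frequencies follow the Boltzmann distribution. Exact enumeration is only feasible for a handful of units; larger machines rely on sampling alone.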

Hopfield and Hinton laid the foundations on which today's remarkable development of machine learning rests, and that is why the Nobel Committee for Physics has decided to honor them. The examples cited by the committee include predicting the molecular structure of proteins and developing new materials for more efficient solar cells.
